When we tested AI copy checkers back in September 2023, the results weren’t pretty. Human writing was flagged as robotic. AI writing slipped through undetected. Different platforms gave wildly different verdicts on the same text.

Two years on, we did another experiment. Same principle: two pieces of AI-generated copy and one human-written piece, tested across ten of the most widely used detectors.

This time, the results were striking.

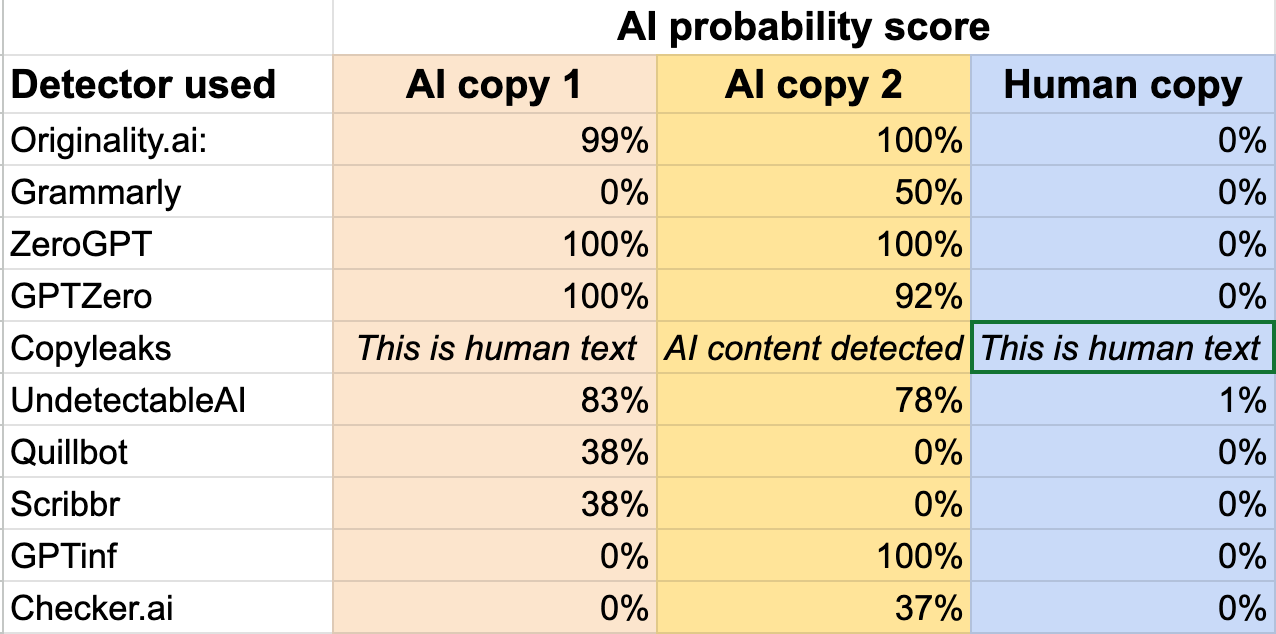

You can see them outlined in the chart below:

The percentages expressed here are essentially probability calculations. The closer to 100%, the more likely the tool considers the copy to have been generated automatically.

The exception is Copyleaks, which simply states whether it considers the copy to have been written by AI or by a human.

It’s important to note that we only ran three pieces through the detectors this time – fewer than in our 2023 test.

ZeroGPT flagged both AI pieces at 100% AI.

The human piece came back at 0% AI.

That’s as clear and accurate as it gets, and a major step forward compared with 2023’s muddle.

Originality.ai scored the AI pieces at 99% and 100% AI.

The human piece came back at 0% AI.

Not perfect, but about as good as most brands could reasonably hope for.

GPTZero rated the AI texts at 100% and 92% AI, while giving the human piece 0% AI.

UndetectableAI scored the AI samples at 83% and 78% AI, with the human text at just 1% AI.

Neither flawless, but both producing results that feel broadly reliable.

Copyleaks correctly identified one of the two AI pieces as well as the human piece.

Grammarly marked one AI text at 0% AI and the other at 50% AI, while accurately rating the human piece at 0% AI.

Quillbot, Scribbr, GPTinf and Checker.ai varied in their results, with confidence scores dipping as low as 37%.

However, none of them falsely flagged the human-written copy as AI generated.
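To compare percentage scores with a yes/no verdict like the one Copyleaks gives, one option is to apply a cut-off. The snippet below is a minimal sketch of that idea, not how any detector works internally. It uses only the scores quoted above for GPTZero, UndetectableAI and Grammarly. The 50% threshold, the ordering of the two AI pieces and the function names are our own assumptions for illustration.

```python
# Scores (% AI) quoted in this article, as (AI piece, AI piece, human piece).
# The order of the two AI pieces is an assumption; it does not affect the counts.
REPORTED_SCORES = {
    "GPTZero": (100, 92, 0),
    "UndetectableAI": (83, 78, 1),
    "Grammarly": (0, 50, 0),
}

# Assumption: a score strictly above 50% counts as an "AI" verdict.
THRESHOLD = 50

EXPECTED = ("AI", "AI", "human")


def verdicts(scores, threshold=THRESHOLD):
    """Turn percentage scores into 'AI' / 'human' labels."""
    return tuple("AI" if score > threshold else "human" for score in scores)


def correct_calls(scores, threshold=THRESHOLD):
    """Count how many of the three pieces were labelled correctly."""
    return sum(v == e for v, e in zip(verdicts(scores, threshold), EXPECTED))


if __name__ == "__main__":
    for name, scores in REPORTED_SCORES.items():
        print(f"{name}: {verdicts(scores)} -> {correct_calls(scores)}/3 correct")
```

Under this assumed threshold, Grammarly's 50% score falls on the "human" side of the line. Moving the cut-off changes the verdict, which is one reason to read the raw percentage rather than a single label.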

Three things stand out. First, progress is tangible. ZeroGPT was flawless, and Originality.ai, GPTZero and UndetectableAI showed marked improvement. This is a big step up from two years ago.

Second, inconsistency is still common. Copyleaks’ contradictory verdicts and Grammarly’s uneven scoring remind us that not all detectors are keeping pace.

Third, detectors remain guides, not judges. Even the best results can’t replace human oversight. Tools can indicate probability, but they can’t measure quality, intent or brand fit.

Accurate detection matters to everyone involved. For clients, it’s reassurance that they’re getting what they paid for. For writers, it’s about protecting credibility from unfair “AI” labels. For agencies, it’s about providing clarity where uncertainty breeds mistrust.

Back in our September 2023 blog, we noted that detectors rely on proxies like perplexity and burstiness – statistical markers that don’t fully capture what makes writing human. That remains true today. But our latest tests show that some platforms are starting to get much closer.
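For readers curious about what those proxies look like in practice, here is a deliberately simplified sketch. Commercial detectors don't publish their methods and are generally understood to score text with large language models, so treat this as a toy stand-in. Here, burstiness is approximated as the variation in sentence length, and perplexity is computed against a tiny word-frequency model built from a reference text. Both definitions, and all function names, are our assumptions.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def sentences(text):
    """Split after ., ! or ? followed by whitespace. Crude, but dependency-free."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def words(text):
    """Lowercase word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text):
    """Coefficient of variation of sentence length, measured in words.

    0.0 means every sentence is the same length; higher means more variety.
    """
    lengths = [len(words(s)) for s in sentences(text)]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    Lower values mean the words in `text` are more predictable given `reference`.
    """
    tokens = words(text)
    if not tokens:
        return float("inf")
    ref_counts = Counter(words(reference))
    vocab = set(ref_counts) | set(tokens)
    total = sum(ref_counts.values()) + len(vocab)  # add-one smoothing
    log_prob = sum(math.log((ref_counts[t] + 1) / total) for t in tokens)
    return math.exp(-log_prob / len(tokens))


if __name__ == "__main__":
    uniform = "The cat sat here. The dog sat here. The bird sat here."
    varied = "Stop. The cat, having weighed every option open to it, sat here. Why?"
    print("burstiness (uniform):", burstiness(uniform))
    print("burstiness (varied): ", burstiness(varied))
```

Even in this toy form, the limitation is visible: a human who writes in evenly sized sentences with common words would score as "predictable", which has nothing to do with who actually wrote the piece.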

So, are AI detectors getting better?

The answer is yes – some of them are. But not all of them, which means the safest bet remains unchanged: trust the writers you know, judge copy by the value it delivers, and treat detectors as advisory rather than definitive.