The rise of genAI platforms has been mirrored by a proliferation of AI detection tools. Every now and then we test them out, and without fail, the results are concerning.

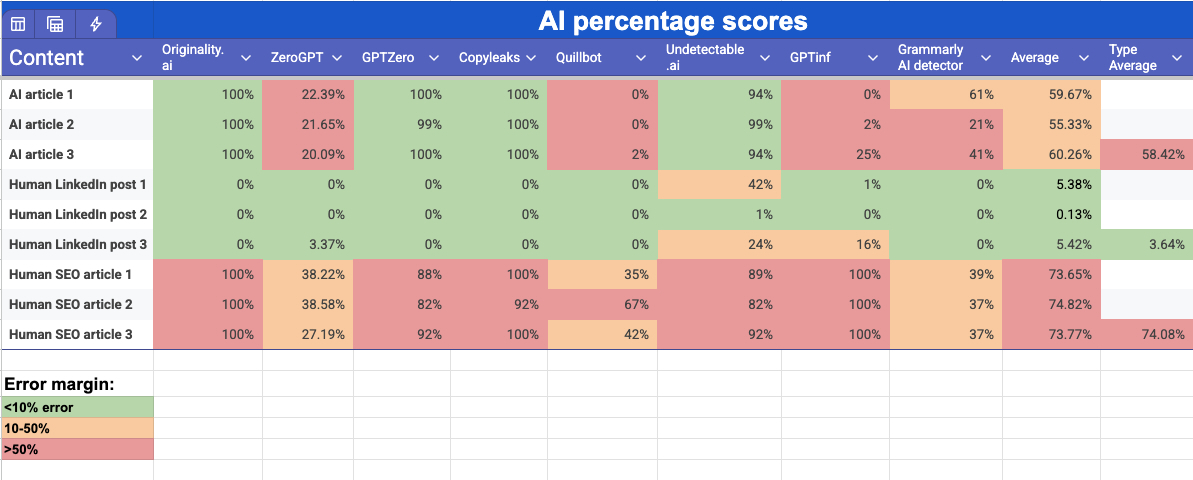

In our latest tests last week, we ran nine content pieces through eight popular detectors: Originality.ai, ZeroGPT, GPTZero, Copyleaks, Quillbot, Undetectable.ai, GPTinf, and Grammarly AI Detector.

Three of the nine content pieces were AI-written articles and the other six were human-written. Of those six, three were short, hastily composed LinkedIn posts. The other three were long, instructional SEO articles, all produced for clients this year.

Each of the detectors gives an AI percentage score. Crucially, these aren’t meant to indicate how much of the content is human- or AI-written. Rather, they’re supposed to reflect the platforms’ confidence in whether each item is human- or AI-written.

As the results table below shows, while our sample size was relatively small, the takeaway was quite clear: all the detectors have weaknesses, some of them extreme. A number were unreliable in both directions, i.e. they failed to spot AI content and they flagged human writing as AI.

Across all human-written content, the average AI detection score was 43.19%. In other words, relying on these tools as proof of authorship isn’t just risky, it’s a gamble.

Remarkably, across all eight detectors, the three human-written SEO articles received an average AI likelihood score of 74.8%, compared with only 58.42% for the three AI-written articles. Only the human-written LinkedIn posts received a score anywhere close to reality, at 3.64%.

For the three fully AI-generated articles, some tools performed as advertised. Originality.ai, GPTZero and Copyleaks consistently returned 100% AI scores. Others, however, fell well short. ZeroGPT averaged just 21% on AI content, while Quillbot and GPTinf returned scores as low as 0% and 2% respectively on individual AI articles.

When it came to evaluating the human-written SEO articles, the three top performers fell off a cliff. Originality.ai scored all three pieces as 100% AI, while Copyleaks scored two at 100% and one at 92%, and GPTZero scored them at 82%, 88% and 92%.

These results, coupled with the SEO articles' high average AI score, should concern not only copywriters and content agencies but also their clients.

What could account for the false readings? It seems that the detectors weren’t reacting to who wrote the content so much as how it was written.

Structure appears to be key.

Whereas the LinkedIn posts were loose, conversational and uneven in rhythm – what we might call freestyle jottings – the SEO articles were more controlled. In particular, they had short paragraphs, keyword-dense sections, predictable formatting and a clear informational flow. Ironically, that's exactly what good SEO writing has looked like for years. It's also what LLMs are trained to produce.

This is where AI detection starts to collapse under its own logic. It's well known that detectors lean heavily on signals such as predictability, repetition and statistical smoothness. Formulaic structure trips those alarms. As a result, highly disciplined human writing is often mistaken for AI, while genuinely AI-generated content can slip through if it has been lightly edited or prompted creatively.
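To make "statistical smoothness" a little more concrete, here is a toy Python sketch of one simple signal, often called burstiness: how much sentence length varies across a piece. Treat it as an illustrative assumption only. The commercial detectors we tested don't publish how they score text, and none of them is likely to rely on anything this crude. The function names and both sample texts are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    # Naive split on runs of ., ! or ? followed by optional whitespace;
    # good enough for a toy example, not for real-world text.
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    # Coefficient of variation of sentence length (std dev / mean).
    # Lower values mean a more uniform, "smoother" rhythm.
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.stdev(lengths) / statistics.mean(lengths)


# Hypothetical samples: a loose, LinkedIn-style post and a tidy, SEO-style passage.
freestyle = ("Honestly? Wild week. We shipped the new site after three late nights, "
             "two broken builds and one very patient client. Lesson learned.")
structured = ("Keyword research helps you find relevant terms. "
              "Clear headings help readers scan the page. "
              "Short paragraphs help readers stay engaged.")

print(f"freestyle:  {burstiness(freestyle):.2f}")
print(f"structured: {burstiness(structured):.2f}")
```

Run as written, this prints roughly 1.40 for the freestyle sample and 0.09 for the structured one. The tidy passage is far more uniform, which is exactly the kind of regularity a smoothness-based signal could read as machine-like, whoever actually wrote it.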

The implication is uncomfortable: detection tools aren’t measuring authorship. Rather, like LLMs, they’re looking for patterns. The problem for professional SEO writers is that these are the patterns they have been taught to follow.

AI detection isn’t useless, but it’s not authoritative either. Used as a rough signal it can prompt review or discussion. Used as a gatekeeper or proof mechanism, it can be deeply flawed.

Until detection tools can reliably distinguish between structured human craft and machine-generated text, they should never be treated as judges. At best, they’re advisory. At worst, they undermine trust in the very people they’re meant to protect.

The real safeguard against bad content remains unchanged: skilled writers, clear editorial strategy and a focus on value, not just patterns.